Jean Creusefond

Friday, May 13, 2016, at 11:00 a.m., room 24-25/405

Slides

This talk has two relatively independent parts: the evaluation of community structures through ground truths, and the analysis of the community membership of motifs in link streams.

Evaluating community structures on theoretical grounds is very delicate. Many structural properties are considered important, so deeming one structure better than another involves arbitrary choices among these preferences, embodied in the choice of a quality function or of benchmarks.
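
To make "quality function" concrete, here is a minimal Python sketch of Newman-Girvan modularity, one widely used quality function. It is only an illustration: the talk does not say which quality functions it considers, and the function name `modularity` and the toy graph (two triangles joined by a bridge edge) are hypothetical.

```python
from collections import defaultdict

def modularity(edges, community):
    """Newman-Girvan modularity of a partition of an undirected, unweighted graph.

    Q = sum over communities c of  L_c / m - (d_c / (2m))**2, where L_c is the
    number of edges inside c, d_c the total degree of c's nodes, m the edge count.
    """
    m = len(edges)
    inner = defaultdict(int)   # L_c: edges with both ends in community c
    degree = defaultdict(int)  # d_c: sum of degrees of the nodes in c
    for u, v in edges:
        degree[community[u]] += 1
        degree[community[v]] += 1
        if community[u] == community[v]:
            inner[community[u]] += 1
    return sum(inner[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)

# Two triangles joined by a single bridge edge (2, 3).
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_triangles = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
one_block = {n: "a" for n in range(6)}
print(modularity(edges, two_triangles))  # 5/14, about 0.357
print(modularity(edges, one_block))      # 0.0
```

Here the split into the two triangles scores higher than the single block; a different quality function could rank candidate partitions differently, which is where the arbitrariness described above comes from.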

To avoid these problems, many researchers now evaluate their results by comparing them with community structures collected together with the datasets, arguing that the closeness between their results and this ground truth is significant evidence of relevance.

In this part, I will discuss a methodology that reconciles the two approaches and identifies which ground truths favor which quality functions.

In particular, I will emphasize the choice of the partition comparison function: it is often considered innocuous, but it actually changes the results radically.
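
The sensitivity to the comparison function already shows up on a toy example. The Python sketch below implements two common partition comparison measures from scratch, normalized mutual information (NMI, here with arithmetic-mean normalization) and the adjusted Rand index (ARI), and scores two hypothetical candidate partitions against a hypothetical ground truth made of two communities of four nodes. These are not necessarily the measures used in the talk.

```python
from collections import Counter
from math import comb, log

def entropy(labels):
    """Shannon entropy (in nats) of a partition given as a list of labels."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())

def mutual_information(x, y):
    """Mutual information (in nats) between two partitions of the same nodes."""
    n = len(x)
    cx, cy = Counter(x), Counter(y)
    return sum((c / n) * log(c * n / (cx[a] * cy[b]))
               for (a, b), c in Counter(zip(x, y)).items())

def nmi(x, y):
    """NMI with arithmetic-mean normalization: 2 I(X;Y) / (H(X) + H(Y))."""
    hx, hy = entropy(x), entropy(y)
    if hx + hy == 0:
        return 1.0  # both partitions put all nodes in one block
    return 2 * mutual_information(x, y) / (hx + hy)

def ari(x, y):
    """Adjusted Rand index: pair-counting agreement corrected for chance."""
    n = len(x)
    index = sum(comb(c, 2) for c in Counter(zip(x, y)).values())
    sum_x = sum(comb(c, 2) for c in Counter(x).values())
    sum_y = sum(comb(c, 2) for c in Counter(y).values())
    expected = sum_x * sum_y / comb(n, 2)
    max_index = (sum_x + sum_y) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both partitions trivial
    return (index - expected) / (max_index - expected)

truth = [0, 0, 0, 0, 1, 1, 1, 1]   # two communities of four nodes
singletons = list(range(8))        # every node in its own community
noisy = [0, 0, 0, 1, 1, 1, 1, 0]   # two blocks, with two nodes swapped
for name, cand in [("singletons", singletons), ("noisy", noisy)]:
    print(name, round(nmi(truth, cand), 3), round(ari(truth, cand), 3))
```

NMI ranks the all-singletons partition first (0.5 against about 0.189), whereas ARI ranks the noisy two-block partition first (0.125 against 0). For library implementations, scikit-learn provides `normalized_mutual_info_score` and `adjusted_rand_score`.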

For reference, the software developed for this work (which includes a large number of community detection algorithms and quality functions) is available in full at the following address: https://codacom.greyc.fr/

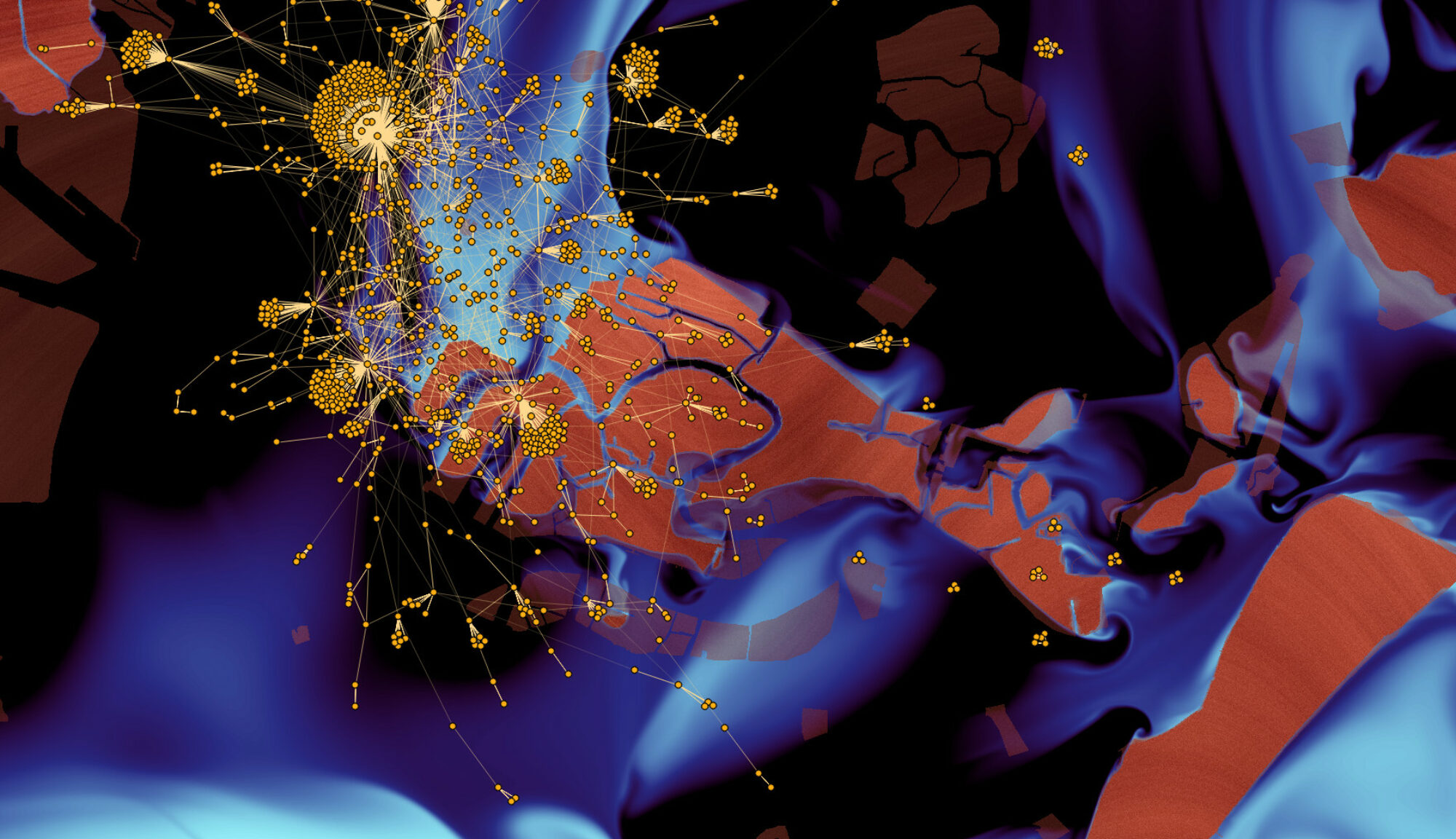

In the second part, I will discuss ongoing work on the analysis of link streams: graphs in which each arc is labeled with a time and multi-arcs are allowed.

The link streams of interest here represent communication networks: each arc represents a directed interaction between two users.
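
A link stream of this kind can be stored as a time-sorted list of `(t, u, v)` triples. The minimal Python sketch below uses hypothetical class and method names; it keeps repeated arcs instead of merging them, and treats `(u, v)` and `(v, u)` as different pairs because interactions are directed.

```python
from collections import Counter
from typing import NamedTuple

class Link(NamedTuple):
    t: float  # time stamp of the interaction
    u: str    # sender
    v: str    # receiver

class LinkStream:
    """Directed link stream: a time-sorted list of (t, u, v) arcs, multi-arcs kept."""

    def __init__(self, links):
        self.links = sorted(Link(*link) for link in links)

    def between(self, start, end):
        """Links whose time stamp lies in [start, end)."""
        return [link for link in self.links if start <= link.t < end]

    def multiplicity(self):
        """Number of arcs for each ordered pair (u, v)."""
        return Counter((link.u, link.v) for link in self.links)

s = LinkStream([(5, "B", "C"), (1, "A", "B"), (3, "A", "B")])
print(s.multiplicity()[("A", "B")])  # 2: both A->B arcs are kept
print(s.multiplicity()[("B", "A")])  # 0: direction matters
print(s.between(0, 4))               # the two A->B links, at t=1 and t=3
```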

Community detection algorithms that attempt to analyze such streams frequently aggregate the communication network over time windows, to which traditional (or adapted) methods can then be applied.

In this case, some information is lost: the causality between links.
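
This loss can be illustrated with a small sketch. The Python function below (hypothetical name `aggregate`, assuming non-overlapping windows of fixed length `window`; the talk does not specify a windowing scheme) collapses each window into a weighted directed graph. Two streams whose interactions happen in opposite orders yield the same aggregate, so the order of the interactions, and with it any causal reading, cannot be recovered.

```python
from collections import Counter, defaultdict

def aggregate(stream, window):
    """Collapse (t, u, v) links into one weighted directed graph per window.

    Returns {window_index: Counter({(u, v): number_of_links})}.
    """
    snapshots = defaultdict(Counter)
    for t, u, v in stream:
        snapshots[int(t // window)][(u, v)] += 1
    return dict(snapshots)

# Same interactions, opposite orders.
cascade = [(1, "A", "B"), (2, "B", "C"), (3, "B", "D")]  # A->B comes first
reverse = [(1, "B", "D"), (2, "B", "C"), (3, "A", "B")]  # A->B comes last

print(aggregate(cascade, window=10) == aggregate(reverse, window=10))  # True
```

Shrinking the window preserves some ordering (with `window=2` the two aggregates differ), at the cost of sparser snapshots.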

For example, if a group of people always exhibits the same communication structure (e.g., when "A" interacts with "B", "B" then systematically interacts with "C" and "D"), can we infer the corresponding community structure?

To assess the impact of this information, I focused on motifs: communication chains whose causality seems likely (the first interaction probably triggered the next one, and so on).

The relationship between these motifs and the community structure remains to be analyzed. I will present the tools developed for this purpose, along with some preliminary results.
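
As an illustration only, the Python sketch below assumes one possible definition of such motifs: a chain of `length` links in which each link leaves the receiver of the previous one, strictly later and at most `delta` time units after it. The function names, `delta`, `length`, and the intra-community ratio used as a measure of community membership are all hypothetical; they are not the tools presented in the talk.

```python
from bisect import bisect_right
from collections import defaultdict

def causal_chains(stream, delta, length=2):
    """Yield chains of `length` links (t, u, v) where each link starts at the
    previous link's receiver, strictly later and at most `delta` after it."""
    links = sorted(stream)
    outgoing = defaultdict(list)      # sender -> its links, sorted by time
    for link in links:
        outgoing[link[1]].append(link)
    out_times = {u: [l[0] for l in ls] for u, ls in outgoing.items()}

    def extend(chain):
        if len(chain) == length:
            yield tuple(chain)
            return
        t, _, v = chain[-1]
        nxt, times = outgoing.get(v, []), out_times.get(v, [])
        i = bisect_right(times, t)    # first link sent by v strictly after t
        while i < len(nxt) and nxt[i][0] - t <= delta:
            yield from extend(chain + [nxt[i]])
            i += 1

    for link in links:
        yield from extend([link])

def intra_community_fraction(chains, community):
    """Share of chains whose nodes all belong to a single community."""
    chains = list(chains)
    if not chains:
        return 0.0
    inside = sum(
        1 for c in chains
        if len({community[x] for _, u, v in c for x in (u, v)}) == 1
    )
    return inside / len(chains)

stream = [(1, "A", "B"), (2, "B", "C"), (3, "B", "D"), (10, "C", "E"),
          (20, "A", "B"), (21, "B", "C")]
community = {"A": 0, "B": 0, "C": 0, "D": 1, "E": 1}
chains = list(causal_chains(stream, delta=3))
for chain in chains:
    print(chain)
print(intra_community_fraction(chains, community))  # 2/3
```

On this toy stream with `delta=3`, three chains are found (A→B→C twice, A→B→D once), and two of them stay inside community 0.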