Romain Hollanders, Daniel Bernardes, Bivas Mitra, Raphael Jungers, Jean-Charles Delvenne, Fabien Tarissan.

In Journal of Network Science, 2(3):341-366, Cambridge University Press, 2014.

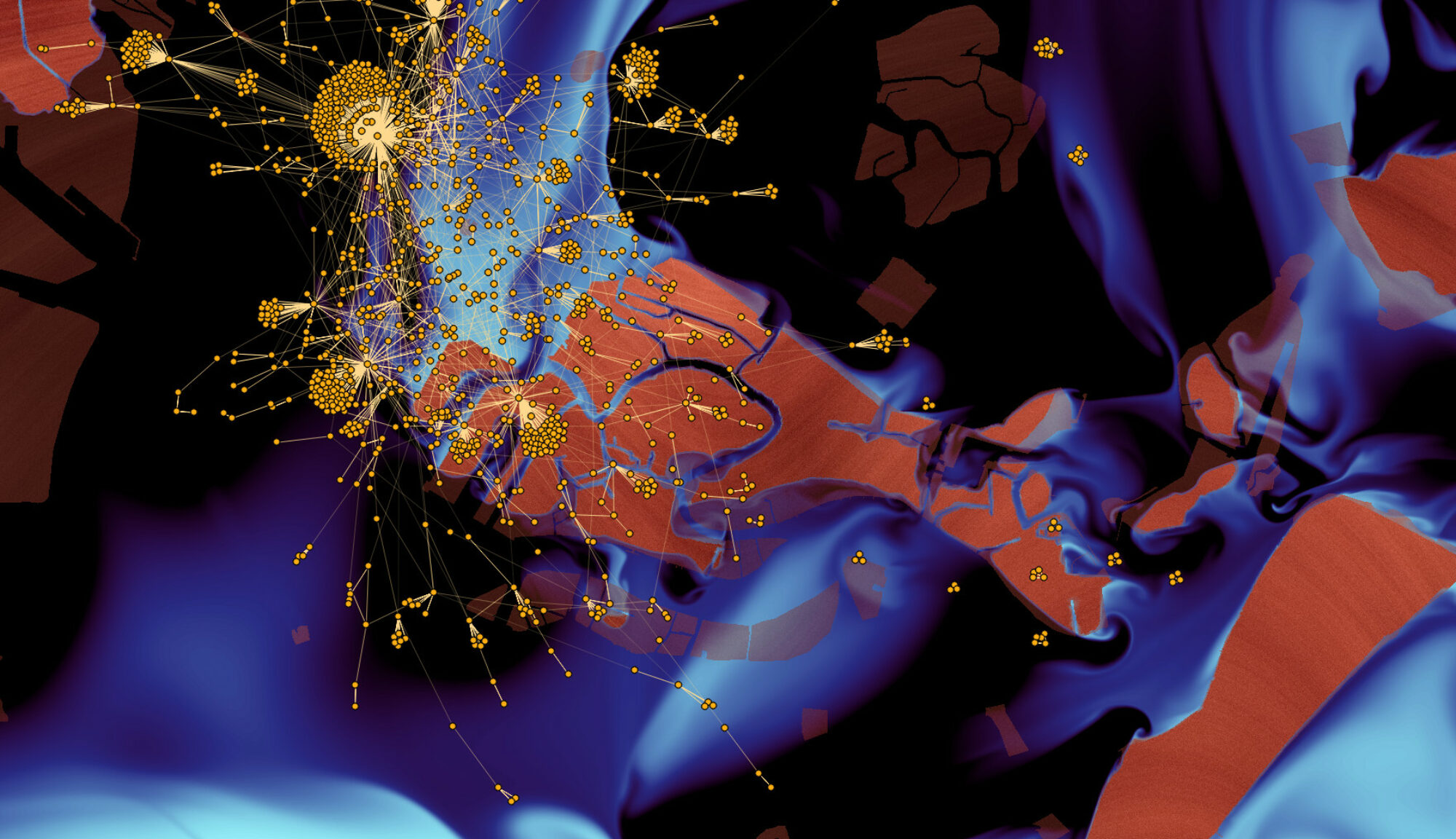

Peer-to-peer (p2p) systems have drawn a lot of attention in the past decade as they have become a major source of Internet traffic. The amount of data flowing through p2p networks is huge and hence challenging both to comprehend and to control. In this work, we take advantage of a new and rich dataset recording p2p activity at a remarkable scale to address these difficult problems. After extracting the relevant and measurable properties of the network from the data, we develop two models that link the low-level properties of the network, such as the proportion of peers that do not share content (i.e., free riders) or the distribution of the files among the peers, to its high-level properties, such as the Quality of Service or the diffusion of content, which are of interest for supervision and control purposes. We observe a significant agreement between the high-level properties measured on the real data and on the synthetic data generated by our models, which is encouraging for the use of our models in practice as large-scale prediction tools. Relying on them, we demonstrate that efforts to reduce the number of free riders do indeed improve the availability of files on the network. We observe, however, a saturation of this phenomenon after 65% of free riders.